LLMs? The feeling is neutral

Innovation team

Innovation is about invalidating beliefs and assumptions. This is especially true when something is new, shiny and exciting. Last week we ran an internal survey at Torchbox to understand how the rest of the organisation felt about Large Language Models. The results surprised us.

A small group of us have been engaging heavily with LLMs and AI over the past few months. We’ve each been coming at it from our own perspective: some of us are busy building things, others are looking at it through the lens of previous disruptions and what comes next, and others have been getting deep into the ethics of these new tools. We assumed the rest of the company was as engaged as we were.

The response was: meh.

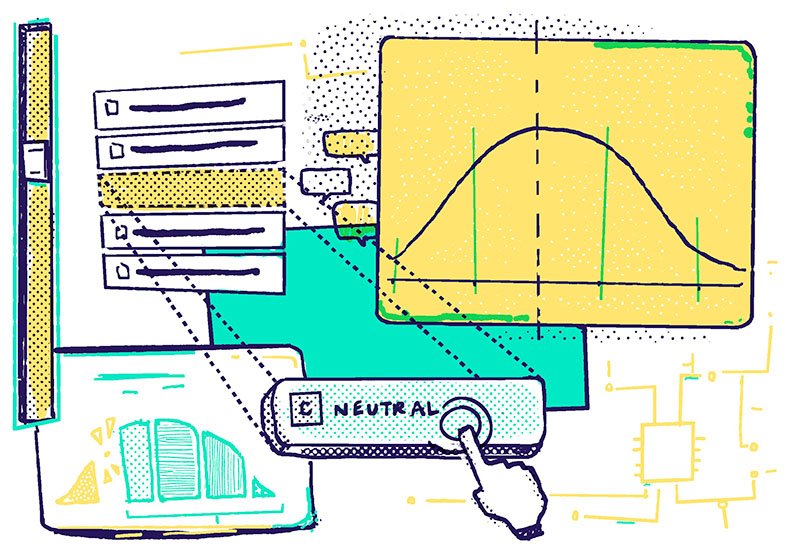

Specifically, whilst everyone felt Torchbox should adopt LLMs, on every question the majority of responses tended towards the central (‘neutral’) option. This was a surprise, to say the least. A bigger surprise was how uncomfortable we were with the ethics of LLMs. Ethics have always been a key part of our innovation work, but the results made it stark just how important they will be for us as a purpose-led company.

Back in the ’90s Geoffrey Moore wrote *Crossing the Chasm*. It’s a seminal book about why certain technologies make the jump from early adopters to the early majority. It is written from the perspective of marketing a start-up or new innovation, but it is equally applicable to understanding how innovations are adopted within a culture. When ChatGPT, built on GPT-3.5, was breaking all the records as the fastest product to gain 1 million, and then 100 million, users around the end of 2022, it looked like it had already crossed the chasm.

Our internal survey has changed our view: LLMs look like they’re still going to conform to that adoption cycle. As an innovation team, we’re now working out how to nourish an emergent strategy for tool adoption.

If you’re reading this, I’d hypothesise that you’re the same shape of Purple Cow as me, with a tendency to pick up new tools just because they’re new. If so, I’d encourage you to run a similar survey to gather some data on how others in your organisation feel about LLMs.

We used a simple five-point Likert scale, where each question had the following possible responses:

- Strongly disagree

- Disagree

- Neither agree nor disagree

- Agree

- Strongly agree

And we asked the following five questions to understand sentiment:

- I am excited about {our organisation} adopting Large Language Models (LLMs)

- I believe LLMs offer valuable opportunities for {our organisation}

- I believe {our organisation} should adopt LLMs quickly

- I am comfortable with the ethics of using LLMs

- I am confident in my ability to use LLMs effectively
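If you do set up a similar survey, tallying the responses takes very little code. Below is a minimal sketch in Python, assuming each question’s answers are exported as a list of the five response labels above. The `summarise` function, the `{org}` placeholder and the sample answers are hypothetical illustrations, not our real data or tooling. It reports the count for each option, the median score, the most common answer and the share of neutral answers, which is the figure that surprised us.

```python
from collections import Counter
from statistics import median

# The five-point Likert scale, scored 1 (Strongly disagree) to 5 (Strongly agree).
SCALE = [
    "Strongly disagree",
    "Disagree",
    "Neither agree nor disagree",
    "Agree",
    "Strongly agree",
]
NEUTRAL = SCALE[2]

# The five sentiment statements; {org} is a hypothetical placeholder for the organisation's name.
QUESTIONS = [
    "I am excited about {org} adopting Large Language Models (LLMs)",
    "I believe LLMs offer valuable opportunities for {org}",
    "I believe {org} should adopt LLMs quickly",
    "I am comfortable with the ethics of using LLMs",
    "I am confident in my ability to use LLMs effectively",
]


def summarise(responses: list[str]) -> dict:
    """Summarise one question: counts per option, median score, neutral share, modal answer."""
    if not responses:
        raise ValueError("No responses to summarise")
    unknown = set(responses) - set(SCALE)
    if unknown:
        raise ValueError(f"Unrecognised responses: {unknown}")
    counts = Counter(responses)
    # Convert each label to its 1-5 score so an ordinal median can be taken.
    scores = [SCALE.index(r) + 1 for r in responses]
    return {
        "counts": {option: counts.get(option, 0) for option in SCALE},
        "median": median(scores),
        "neutral_share": counts[NEUTRAL] / len(responses),
        "most_common": counts.most_common(1)[0][0],
    }


# Hypothetical answers to the ethics question (illustrative only, not our survey data).
answers = ["Neither agree nor disagree", "Disagree", "Neither agree nor disagree", "Agree"]
summary = summarise(answers)
print(QUESTIONS[3])
print(summary["median"], summary["neutral_share"], summary["most_common"])
# prints: 3.0 0.5 Neither agree nor disagree
```

Because Likert responses are ordinal, the sketch reports the median and the full distribution rather than leaning on a mean, which can hide a cluster of neutral answers.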

Drop us a line if you’d like to catch up on any of this. We’re working on an AI Maturity Matrix for purpose-led organisations, aimed at supporting this conversation and helping you understand whether LLMs are right for your organisation.

If you’re interested in looking at data graphs, the results from our survey are here.
