9 misconceptions about Large Language Models

Director of Innovation

I’ve been running workshops, trainings and webinars over the last month. These are the most common misconceptions that come up about what Large Language Models are and what they can do.

It makes sense for there to be lots of misconceptions. There’s a lot of noise around any new technology, and even more around one as disruptive as Large Language Models (LLMs).

Misconception 1

LLMs can be bias-free

LLM bias shows up in 3 ways:

- Mimicking human falsehoods

- Mimicking human inaccuracies

- Outdated / limited data

Given that LLMs are designed to simulate human language, it is hard to see how those biases can be entirely eliminated. As people using the tools, we need ways to reduce and mitigate those biases to avoid causing harm.

Misconception 2

LLMs are information machines

LLMs are limited to the data they were trained on. Sam Altman - co-founder and CEO of OpenAI - is on record as saying we should consider them as ‘reasoning machines’. They can paraphrase what they have seen, but they can’t be relied on to quote it accurately.

Misunderstanding this can lead to unexpected results. LLMs are designed to mimic human language, not to look things up. If you ask for a set of URLs on a subject the LLM has no data about, it is likely to hallucinate - confidently answer incorrectly - with URLs that don’t exist.

Misconception 3

LLMs are creative

LLMs do a good job of mimicking humans, but they have no creativity of their own. In Innovation we’ve started talking about 20:60:20, where the first and last 20% of a piece of work require human creativity and the middle 60% is LLM graft. They do have emergent properties but they’re not creative.

Misconception 4

LLMs are always learning

LLMs are limited to their original dataset. To “learn” they need to be retrained. The model that you’re interacting with at any given time is static. The thing that you have control over is the prompt.

Misconception 5

LLMs are all seeing

Most LLMs aren’t connected to the internet (e.g. GPT-4 and GPT-3.5 can’t “surf” the web), and even those that are - like Bing - feed the content of web pages into the prompt rather than into training. They can’t see everything.
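To make the "prompt rather than training" point concrete, here is a minimal sketch of how fetched page text might be placed into a prompt. The function name `build_prompt`, the `max_chars` limit and the example page text are all hypothetical, and this is not how Bing or any particular product actually does it. The point is only that the model itself is unchanged; it just gets more text to read.

```python
def build_prompt(question, page_text, max_chars=2000):
    """Put fetched page text into the prompt; the model's weights are untouched.

    Hypothetical helper for illustration only.
    """
    # Only an excerpt fits in a prompt, so the model never "sees everything".
    excerpt = page_text[:max_chars]
    return (
        "Answer the question using only the page content below.\n\n"
        f"Page content:\n{excerpt}\n\n"
        f"Question: {question}\n"
    )


# Made-up page text standing in for whatever a search step returned.
page = "Example Corp opened its Leeds office in March."
prompt = build_prompt("When did the Leeds office open?", page)
print(prompt)
```

Anything that doesn't make it into the excerpt is invisible to the model for that conversation.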

Misconception 6

LLMs are good in any language

LLMs are very good at English - and computer languages written in English - but their performance tails off dramatically in languages with fewer speakers. Given the volume of English-language data on the internet, it’s likely English will continue to be the lingua franca for these models.

Misconception 7

LLMs are only for generation

LLMs predict the next token in a sequence. This makes them very good at a range of tasks beyond generation, such as summarisation, question-answering, reformatting, categorising and analysing.
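As a rough illustration of what "predict the next token" means, here is a toy word-level model that simply counts which word tends to follow which. It is only a sketch under big assumptions: real LLMs use neural networks over sub-word tokens and vastly more data, and the names `train_bigram_model`, `predict_next` and `CORPUS` are made up for this example.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Tiny made-up training text.
CORPUS = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram_model(CORPUS)

print(predict_next(model, "the"))  # "cat" follows "the" most often in CORPUS
print(predict_next(model, "dog"))  # None: "dog" never appeared in training
```

The same mechanism also shows the limits described earlier: the toy model knows nothing outside its training text, and it does not change unless it is trained again.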

Misconception 8

LLMs are sentient

The machine doesn’t have feelings but it can do a good impression of having them because it’s mimicking human discourse. Whilst LLMs aren’t sentient, the mimicry of human behaviour creates a strange dynamic: using ‘please’, ‘thank you’ and generally ‘minding your manners’ can produce better results, as the machine aims to simulate how a helpful person would behave.

Misconception 9

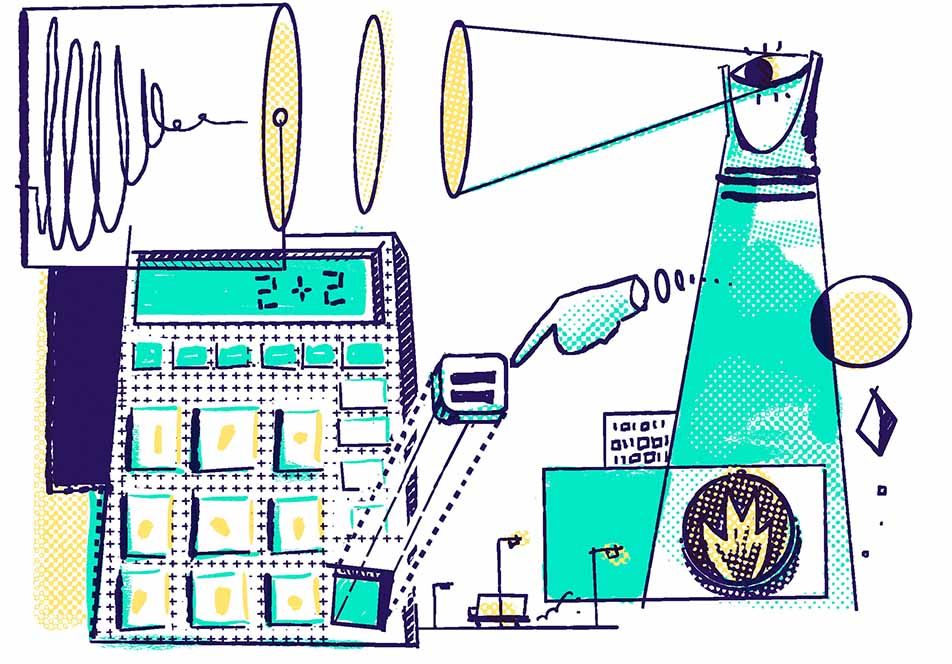

LLMs are deterministic

I’ve left the most important one for last. We’re used to machines taking 2 plus 2 and giving us 4. For as long as we’ve had computers, they’ve been deterministic and have followed rules that mean the same input will receive the same output. But LLMs aren’t deterministic. LLMs are stochastic. Each token of a response is sampled from a probability distribution, so the same prompt can produce different answers from one run to the next. They aren’t completely random, but they are unpredictable in much the way humans are, which means a deterministic approach to ‘talking’ to them won’t work.
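Here is a small sketch of where that stochastic behaviour can come from: the next token is drawn at random, weighted by the model's scores, and a "temperature" setting controls how adventurous the draw is. The scores in `TOKEN_SCORES` are invented for illustration, and the function names are hypothetical; real systems have more moving parts, but temperature-based sampling of this kind is a common approach.

```python
import math
import random


def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities; a lower temperature sharpens them."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(token_scores, temperature, rng):
    """Draw one token at random, weighted by its softmax probability."""
    tokens = list(token_scores)
    probs = softmax([token_scores[t] for t in tokens], temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Invented scores for the token that follows "2 plus 2 is".
TOKEN_SCORES = {"4": 5.0, "four": 3.5, "5": 1.0}


def sample_many(n, temperature, seed):
    """Draw n tokens using a seeded generator so a run can be repeated."""
    rng = random.Random(seed)
    return [sample_next_token(TOKEN_SCORES, temperature, rng) for _ in range(n)]


if __name__ == "__main__":
    print(sample_many(10, temperature=1.0, seed=0))   # a mix of answers
    print(sample_many(10, temperature=0.05, seed=0))  # almost always "4"
```

At a normal temperature the most likely answer wins most of the time but not every time. At a very low temperature the output becomes close to repeatable, which is why the same prompt can feel deterministic in one tool and variable in another.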

Because of these misconceptions there’s a tendency to engage with LLMs in a way that won’t necessarily get the best response. This past week we’ve been working on a new little app called The Art of the AI Prompt that we’ll release soon.